The k-means clustering algorithm works by finding like groups based on Euclidean distance, a measure of distance or similarity. The practitioner selects k groups to cluster, and the algorithm finds the best centroids for the k groups. The practitioner can then use those groups to determine which factors group members relate. For customers, these would be their buying preferences. Clustering is nothing but automated groupbys. With some effort you can create better clusters manually, but you can also just let the data guide you.

import pandas as pd

customers = pd.read_excel("data/bikeshops.xlsx", sheet=1)

products = pd.read_excel("data/bikes.xlsx", sheet=1)

orders = pd.read_excel("data/orders.xlsx", sheet=1)

df = pd.merge(orders, customers, left_on="customer.id", right_on="bikeshop.id")

df = pd.merge(df, products, left_on="product.id", right_on="bike.id")

Now the data frame that simulates output we would get from an SQL query of a sales orders database / ERP system

Around here is where you should formulat a question. I would want to investigate the type of customers interested in Cannondale. I apriori believe that Cannondale customers put function of form, they like durable products and that they are after a strong roadbike at a reasonable price. Now we have to think of a unit to cluster on. I think quantity is foundatation and easily interpretable, so I will cluster on that. Something like value is both the function of quantity and price so you would.’t want to cluster on that. Maybe avervage price as it ignores or dampen the effect of quantity.

The bike shop is the customer. A hypothesis was formed that bike shops purchase bikes based on bike features such as unit price (high end vs affordable), primary category (Mountain vs Road), frame (aluminum vs carbon), etc. The sales orders were combined with the customer and product information and grouped to form a matrix of sales by model and customer.

df = df.T.drop_duplicates().T

df["price.extended"] = df["price"] * df["quantity"]

df = df[["order.date", "order.id", "order.line", "bikeshop.name", "model",

"quantity", "price", "price.extended", "category1", "category2", "frame"]]

df = df.sort_values(["order.id","order.line"])

df = df.fillna(value=0)

df = df.reset_index(drop=True)

## You can easily melt which seems to be anothre

#melt()

## I think melt reverses the pivot_table.

## summarise in R is arg, spread is pivot_table/melt

df["price"] = pd.qcut(df["price"],2)

merger = df.copy()

df = df.groupby(["bikeshop.name", "model", "category1", "category2", "frame", "price"]).agg({"quantity":"sum"}).reset_index().pivot_table(index="model", columns="bikeshop.name",values="quantity").reset_index().reset_index(drop=True)

df.head()

| bikeshop.name | model | Albuquerque Cycles | Ann Arbor Speed | Austin Cruisers | Cincinnati Speed | Columbus Race Equipment | Dallas Cycles | Denver Bike Shop | Detroit Cycles | Indianapolis Velocipedes | ... | Philadelphia Bike Shop | Phoenix Bi-peds | Pittsburgh Mountain Machines | Portland Bi-peds | Providence Bi-peds | San Antonio Bike Shop | San Francisco Cruisers | Seattle Race Equipment | Tampa 29ers | Wichita Speed |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Bad Habit 1 | 5.0 | 4.0 | 2.0 | 2.0 | 4.0 | 3.0 | 27.0 | 5.0 | 2.0 | ... | 6.0 | 16.0 | 6.0 | 7.0 | 5.0 | 4.0 | 1.0 | 2.0 | 4.0 | 3.0 |

| 1 | Bad Habit 2 | 2.0 | 6.0 | 1.0 | NaN | NaN | 4.0 | 32.0 | 8.0 | 1.0 | ... | 1.0 | 27.0 | 1.0 | 7.0 | 13.0 | NaN | 1.0 | 1.0 | NaN | NaN |

| 2 | Beast of the East 1 | 3.0 | 9.0 | 2.0 | NaN | NaN | 1.0 | 42.0 | 6.0 | 3.0 | ... | NaN | 18.0 | 2.0 | 7.0 | 5.0 | 1.0 | NaN | 2.0 | 2.0 | NaN |

| 3 | Beast of the East 2 | 3.0 | 6.0 | 2.0 | NaN | 2.0 | 1.0 | 35.0 | 3.0 | 3.0 | ... | NaN | 33.0 | 4.0 | 10.0 | 8.0 | 2.0 | 1.0 | 3.0 | 6.0 | 1.0 |

| 4 | Beast of the East 3 | 1.0 | 2.0 | NaN | NaN | 1.0 | 1.0 | 39.0 | 6.0 | NaN | ... | 5.0 | 23.0 | 1.0 | 13.0 | 4.0 | 6.0 | NaN | 1.0 | 2.0 | NaN |

5 rows × 31 columns

rad = pd.merge(df, merger.drop_duplicates("model"), on="model", how="left")

rad.price.extended = rad.price

rad = rad.drop(["order.date","order.id","order.line","bikeshop.name","quantity","price.extended"],axis=1)

non_cat = list(rad.select_dtypes(exclude=["category","object"]).columns)

cat = list(rad.select_dtypes(include=["category","object"]).columns)

rad[non_cat] = rad[non_cat].fillna(value=0)

rad[rad.columns.difference(cat)] = rad[rad.columns.difference(cat)]/rad[rad.columns.difference(cat)].sum()

Now we are ready to perform k-means clustering to segment our customer-base. Think of clusters as groups in the customer-base. Prior to starting we will need to choose the number of customer groups, k, that are to be detected. The best way to do this is to think about the customer-base and our hypothesis. We believe that there are most likely to be at least four customer groups because of mountain bike vs road bike and premium vs affordable preferences. We also believe there could be more as some customers may not care about price but may still prefer a specific bike category. However, we’ll limit the clusters to eight as more is likely to overfit the segments. KMeans is really for something that has attributes

Dendogram shows the distance between any two observations in a dataset. The vertical axis determines the distance. The longer the axis, the larger the distance.

%matplotlib inline

import matplotlib.cm as cm

import seaborn as sn

from sklearn.cluster import KMeans

cmap = sn.cubehelix_palette(as_cmap=True, rot=-.3, light=1)

sn.clustermap(rad.iloc[:,1:-4:].T.head(), cmap=cmap, linewidths=.5)

<seaborn.matrix.ClusterGrid at 0x10ab5c208>

cluster_range = range(1, 8)

cluster_errors = []

for num_clusters in cluster_range:

clusters = KMeans( num_clusters )

clusters.fit(rad.iloc[:,1:-4:].T)

cluster_errors.append( clusters.inertia_ )

clusters_df = pd.DataFrame( { "num_clusters":cluster_range, "cluster_errors": cluster_errors } )

clusters_df

| cluster_errors | num_clusters | |

|---|---|---|

| 0 | 0.184222 | 1 |

| 1 | 0.142030 | 2 |

| 2 | 0.121164 | 3 |

| 3 | 0.103948 | 4 |

| 4 | 0.097594 | 5 |

| 5 | 0.089718 | 6 |

| 6 | 0.083149 | 7 |

import matplotlib.pyplot as plt

plt.figure(figsize=(12,6))

plt.plot( clusters_df.num_clusters, clusters_df.cluster_errors, marker = "o" )

[<matplotlib.lines.Line2D at 0x1a12105c50>]

Clearly after 4 nothing much happens. You will overfit if you move further away than the elbow.

I juat compared the last two feature against eachother

You have to label encode them for below to work

from sklearn import preprocessing le = preprocessing.LabelEncoder()

for col in rad: if rad[col].dtype == ‘object’ or rad[col].dtype.name == ‘category’: rad[col] = le.fit_transform(rad[col])

from sklearn.metrics import silhouette_samples, silhouette_score

import numpy as np

cluster_range = range( 2, 7 )

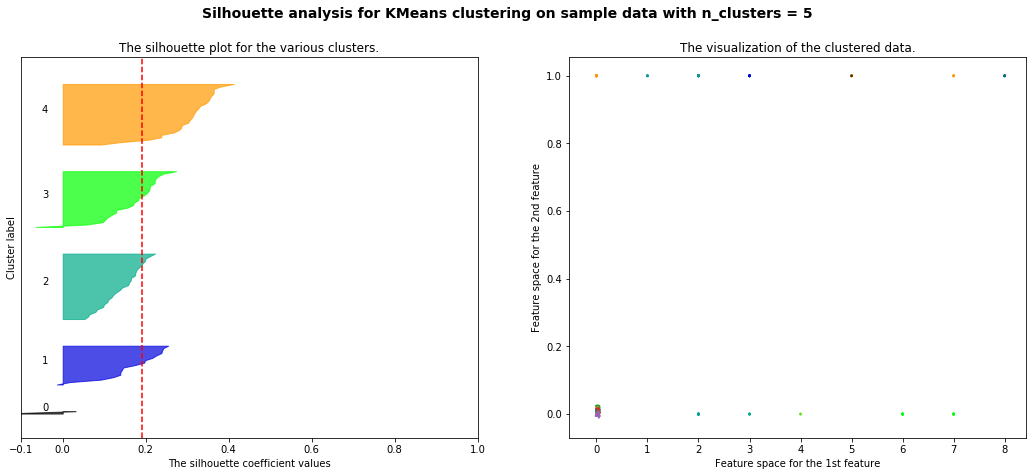

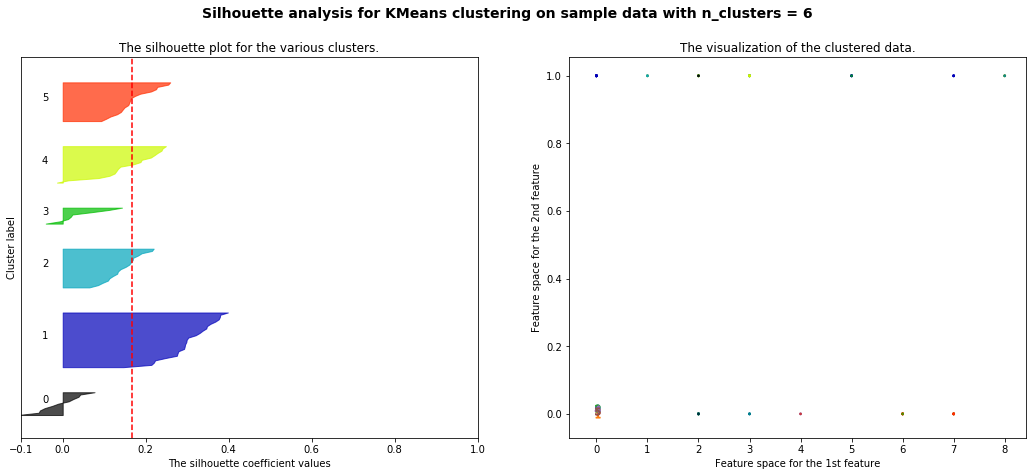

for n_clusters in cluster_range:

# Create a subplot with 1 row and 2 columns

fig, (ax1, ax2) = plt.subplots(1, 2)

fig.set_size_inches(18, 7)

# The 1st subplot is the silhouette plot

# The silhouette coefficient can range from -1, 1 but in this example all

# lie within [-0.1, 1]

ax1.set_xlim([-0.1, 1])

# The (n_clusters+1)*10 is for inserting blank space between silhouette

# plots of individual clusters, to demarcate them clearly.

ax1.set_ylim([0, len(rad.iloc[:,1:-4:]) + (n_clusters + 1) * 10])

# Initialize the clusterer with n_clusters value and a random generator

# seed of 10 for reproducibility.

clusterer = KMeans(n_clusters=n_clusters, random_state=10)

cluster_labels = clusterer.fit_predict( rad.iloc[:,1:-4:] )

# The silhouette_score gives the average value for all the samples.

# This gives a perspective into the density and separation of the formed

# clusters

silhouette_avg = silhouette_score(rad.iloc[:,1:-4:], cluster_labels)

print("For n_clusters =", n_clusters,

"The average silhouette_score is :", silhouette_avg)

# Compute the silhouette scores for each sample

sample_silhouette_values = silhouette_samples(rad.iloc[:,1:-4:], cluster_labels)

y_lower = 10

for i in range(n_clusters):

# Aggregate the silhouette scores for samples belonging to

# cluster i, and sort them

ith_cluster_silhouette_values = \

sample_silhouette_values[cluster_labels == i]

ith_cluster_silhouette_values.sort()

size_cluster_i = ith_cluster_silhouette_values.shape[0]

y_upper = y_lower + size_cluster_i

color = cm.spectral(float(i) / n_clusters)

ax1.fill_betweenx(np.arange(y_lower, y_upper),

0, ith_cluster_silhouette_values,

facecolor=color, edgecolor=color, alpha=0.7)

# Label the silhouette plots with their cluster numbers at the middle

ax1.text(-0.05, y_lower + 0.5 * size_cluster_i, str(i))

# Compute the new y_lower for next plot

y_lower = y_upper + 10 # 10 for the 0 samples

ax1.set_title("The silhouette plot for the various clusters.")

ax1.set_xlabel("The silhouette coefficient values")

ax1.set_ylabel("Cluster label")

# The vertical line for average silhoutte score of all the values

ax1.axvline(x=silhouette_avg, color="red", linestyle="--")

ax1.set_yticks([]) # Clear the yaxis labels / ticks

ax1.set_xticks([-0.1, 0, 0.2, 0.4, 0.6, 0.8, 1])

# 2nd Plot showing the actual clusters formed

colors = cm.spectral(cluster_labels.astype(float) / n_clusters)

ax2.scatter(rad.iloc[:, -2], rad.iloc[:, -1], marker='.', s=30, lw=0, alpha=0.7,

c=colors)

# Labeling the clusters

centers = clusterer.cluster_centers_

# Draw white circles at cluster centers

ax2.scatter(centers[:, 0], centers[:, 1],

marker='o', c="white", alpha=1, s=200)

for i, c in enumerate(centers):

ax2.scatter(c[0], c[1], marker='$%d$' % i, alpha=1, s=50)

ax2.set_title("The visualization of the clustered data.")

ax2.set_xlabel("Feature space for the 1st feature")

ax2.set_ylabel("Feature space for the 2nd feature")

plt.suptitle(("Silhouette analysis for KMeans clustering on sample data "

"with n_clusters = %d" % n_clusters),

fontsize=14, fontweight='bold')

plt.show()

For n_clusters = 2 The average silhouette_score is : 0.19917062862266718

For n_clusters = 3 The average silhouette_score is : 0.17962478496274137

For n_clusters = 4 The average silhouette_score is : 0.18721328745339205

For n_clusters = 5 The average silhouette_score is : 0.19029963290478452

For n_clusters = 6 The average silhouette_score is : 0.1665540459580535

At 4 they are all about the same size, crossing the average line. At 4 number off clusters, the cluster sizes are fairly homogeneous. And only a few observations are assigned to wrong cluster and almost all clusters have observations that are more than the average Silhouette score.

## Start the clusters here.

clusters = KMeans(4)

clusters.fit(rad.iloc[:,1:-4:].T)

#cluster_errors.append( clusters.inertia_ )

centroids = clusters.cluster_centers_

clusters = KMeans(4)

clusters.fit(rad.iloc[:,1:-4:])

labels = clusters.predict(rad.iloc[:,1:-4:])

# Centroid values

labels

array([0, 0, 0, 0, 0, 2, 2, 1, 2, 1, 2, 2, 2, 2, 2, 2, 0, 0, 0, 0, 0, 0,

0, 3, 3, 0, 3, 3, 3, 0, 0, 0, 0, 3, 3, 3, 3, 3, 3, 3, 3, 3, 0, 3,

3, 3, 3, 3, 3, 3, 3, 3, 3, 2, 1, 1, 2, 2, 2, 1, 2, 2, 2, 1, 1, 1,

1, 1, 1, 2, 2, 2, 2, 2, 2, 1, 1, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 2,

0, 0, 0, 0, 0, 3, 3, 3, 3], dtype=int32)

centroids.shape

(4, 97)

## IF I had to traspose, I could've sworns

rad.iloc[:,1:-4:].shape

(97, 30)

rad.head()

| model | Albuquerque Cycles | Ann Arbor Speed | Austin Cruisers | Cincinnati Speed | Columbus Race Equipment | Dallas Cycles | Denver Bike Shop | Detroit Cycles | Indianapolis Velocipedes | ... | Providence Bi-peds | San Antonio Bike Shop | San Francisco Cruisers | Seattle Race Equipment | Tampa 29ers | Wichita Speed | price | category1 | category2 | frame | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Bad Habit 1 | 0.017483 | 0.006645 | 0.008130 | 0.005115 | 0.010152 | 0.012821 | 0.011734 | 0.009921 | 0.006270 | ... | 0.009225 | 0.021505 | 0.002674 | 0.015625 | 0.019417 | 0.005917 | (2700.0, 12790.0] | Mountain | Trail | Aluminum |

| 1 | Bad Habit 2 | 0.006993 | 0.009967 | 0.004065 | 0.000000 | 0.000000 | 0.017094 | 0.013907 | 0.015873 | 0.003135 | ... | 0.023985 | 0.000000 | 0.002674 | 0.007812 | 0.000000 | 0.000000 | (414.999, 2700.0] | Mountain | Trail | Aluminum |

| 2 | Beast of the East 1 | 0.010490 | 0.014950 | 0.008130 | 0.000000 | 0.000000 | 0.004274 | 0.018253 | 0.011905 | 0.009404 | ... | 0.009225 | 0.005376 | 0.000000 | 0.015625 | 0.009709 | 0.000000 | (2700.0, 12790.0] | Mountain | Trail | Aluminum |

| 3 | Beast of the East 2 | 0.010490 | 0.009967 | 0.008130 | 0.000000 | 0.005076 | 0.004274 | 0.015211 | 0.005952 | 0.009404 | ... | 0.014760 | 0.010753 | 0.002674 | 0.023438 | 0.029126 | 0.001972 | (414.999, 2700.0] | Mountain | Trail | Aluminum |

| 4 | Beast of the East 3 | 0.003497 | 0.003322 | 0.000000 | 0.000000 | 0.002538 | 0.004274 | 0.016949 | 0.011905 | 0.000000 | ... | 0.007380 | 0.032258 | 0.000000 | 0.007812 | 0.009709 | 0.000000 | (414.999, 2700.0] | Mountain | Trail | Aluminum |

5 rows × 35 columns

for i, c in enumerate(centroids):

rad["Cluster "+str(i)] = list(c)

rad_final = rad.drop(list(rad.iloc[:,1:].iloc[:,:-8].columns),axis=1)

rad_final.sort_values("Cluster 0").head(10)

| model | price | category1 | category2 | frame | Cluster 0 | Cluster 1 | Cluster 2 | Cluster 3 | |

|---|---|---|---|---|---|---|---|---|---|

| 83 | Synapse Hi-Mod Disc Black Inc. | (2700.0, 12790.0] | Road | Endurance Road | Carbon | 0.003503 | 0.009168 | 0.019755 | 0.007196 |

| 63 | Supersix Evo Black Inc. | (2700.0, 12790.0] | Road | Elite Road | Carbon | 0.003620 | 0.008226 | 0.019639 | 0.015364 |

| 54 | Slice Hi-Mod Black Inc. | (2700.0, 12790.0] | Road | Triathalon | Carbon | 0.003827 | 0.016406 | 0.023494 | 0.013543 |

| 64 | Supersix Evo Hi-Mod Dura Ace 1 | (2700.0, 12790.0] | Road | Elite Road | Carbon | 0.003856 | 0.005667 | 0.023053 | 0.006798 |

| 86 | Synapse Hi-Mod Dura Ace | (2700.0, 12790.0] | Road | Endurance Road | Carbon | 0.004202 | 0.013474 | 0.021672 | 0.008991 |

| 76 | Synapse Carbon Disc Ultegra D12 | (2700.0, 12790.0] | Road | Endurance Road | Carbon | 0.004295 | 0.012610 | 0.019516 | 0.012696 |

| 40 | Jekyll Carbon 3 | (2700.0, 12790.0] | Mountain | Over Mountain | Carbon | 0.004389 | 0.013288 | 0.011734 | 0.000524 |

| 66 | Supersix Evo Hi-Mod Team | (2700.0, 12790.0] | Road | Elite Road | Carbon | 0.004652 | 0.008754 | 0.016159 | 0.013339 |

| 7 | CAAD12 Black Inc | (2700.0, 12790.0] | Road | Elite Road | Aluminum | 0.004709 | 0.004142 | 0.019471 | 0.011152 |

| 26 | F-Si Hi-Mod 1 | (2700.0, 12790.0] | Mountain | Cross Country Race | Carbon | 0.004748 | 0.013929 | 0.010030 | 0.000676 |

rad_final = rad_final.rename(columns={"Cluster 0":"Low End Road Bike Customer"})

rad_final.sort_values("Cluster 1").head(10)

| model | price | category1 | category2 | frame | Low End Road Bike Customer | Cluster 1 | Cluster 2 | Cluster 3 | |

|---|---|---|---|---|---|---|---|---|---|

| 56 | Slice Ultegra | (414.999, 2700.0] | Road | Triathalon | Carbon | 0.018821 | 0.000000 | 0.013370 | 0.022077 |

| 72 | Syapse Carbon Tiagra | (414.999, 2700.0] | Road | Endurance Road | Carbon | 0.010617 | 0.000000 | 0.010837 | 0.018366 |

| 53 | Slice 105 | (414.999, 2700.0] | Road | Triathalon | Carbon | 0.013984 | 0.000000 | 0.011002 | 0.017242 |

| 60 | SuperX Rival CX1 | (414.999, 2700.0] | Road | Cyclocross | Carbon | 0.014142 | 0.000000 | 0.010074 | 0.013548 |

| 78 | Synapse Carbon Ultegra 4 | (414.999, 2700.0] | Road | Endurance Road | Carbon | 0.013919 | 0.000000 | 0.011632 | 0.020372 |

| 58 | SuperX 105 | (414.999, 2700.0] | Road | Cyclocross | Carbon | 0.012159 | 0.000000 | 0.009596 | 0.014853 |

| 14 | CAAD8 Sora | (414.999, 2700.0] | Road | Elite Road | Aluminum | 0.015626 | 0.000000 | 0.013606 | 0.017489 |

| 69 | Supersix Evo Tiagra | (414.999, 2700.0] | Road | Elite Road | Carbon | 0.012011 | 0.000264 | 0.013232 | 0.018053 |

| 8 | CAAD12 Disc 105 | (414.999, 2700.0] | Road | Elite Road | Aluminum | 0.016751 | 0.000264 | 0.014013 | 0.015795 |

| 82 | Synapse Disc Tiagra | (414.999, 2700.0] | Road | Endurance Road | Aluminum | 0.013016 | 0.000264 | 0.008813 | 0.024608 |

rad_final = rad_final.rename(columns={"Cluster 1":"High End Road Bike Customer"})

rad_final.sort_values("Cluster 2").head(10)

| model | price | category1 | category2 | frame | Low End Road Bike Customer | High End Road Bike Customer | Cluster 2 | Cluster 3 | |

|---|---|---|---|---|---|---|---|---|---|

| 20 | F-Si 1 | (414.999, 2700.0] | Mountain | Cross Country Race | Aluminum | 0.012330 | 0.017409 | -1.734723e-18 | 0.007079 |

| 30 | Habit 4 | (2700.0, 12790.0] | Mountain | Trail | Aluminum | 0.015225 | 0.011251 | 0.000000e+00 | 0.004316 |

| 29 | Fat CAAD2 | (414.999, 2700.0] | Mountain | Fat Bike | Aluminum | 0.012995 | 0.007621 | 1.734723e-18 | 0.006693 |

| 2 | Beast of the East 1 | (2700.0, 12790.0] | Mountain | Trail | Aluminum | 0.012339 | 0.012125 | 2.670940e-04 | 0.012970 |

| 18 | Catalyst 3 | (414.999, 2700.0] | Mountain | Sport | Aluminum | 0.018260 | 0.003200 | 2.670940e-04 | 0.007307 |

| 16 | Catalyst 1 | (414.999, 2700.0] | Mountain | Sport | Aluminum | 0.014337 | 0.006794 | 3.287311e-04 | 0.008686 |

| 32 | Habit 6 | (414.999, 2700.0] | Mountain | Trail | Aluminum | 0.014141 | 0.004235 | 4.230118e-04 | 0.010441 |

| 25 | F-Si Carbon 4 | (2700.0, 12790.0] | Mountain | Cross Country Race | Carbon | 0.018444 | 0.008732 | 4.230118e-04 | 0.010026 |

| 1 | Bad Habit 2 | (414.999, 2700.0] | Mountain | Trail | Aluminum | 0.012405 | 0.004576 | 4.456328e-04 | 0.008157 |

| 88 | Trail 1 | (414.999, 2700.0] | Mountain | Sport | Aluminum | 0.015381 | 0.005553 | 6.901059e-04 | 0.013061 |

rad_final = rad_final.rename(columns={"Cluster 2":"Aluminum Mountain Bike Customers"})

rad_final.sort_values("Cluster 3").head(10)

| model | price | category1 | category2 | frame | Low End Road Bike Customer | High End Road Bike Customer | Aluminum Mountain Bike Customers | Cluster 3 | |

|---|---|---|---|---|---|---|---|---|---|

| 37 | Habit Hi-Mod Black Inc. | (2700.0, 12790.0] | Mountain | Trail | Carbon | 0.007331 | 0.011520 | 0.008958 | 0.000316 |

| 38 | Jekyll Carbon 1 | (2700.0, 12790.0] | Mountain | Over Mountain | Carbon | 0.008027 | 0.018505 | 0.008085 | 0.000487 |

| 40 | Jekyll Carbon 3 | (2700.0, 12790.0] | Mountain | Over Mountain | Carbon | 0.004389 | 0.013288 | 0.011734 | 0.000524 |

| 34 | Habit Carbon 2 | (2700.0, 12790.0] | Mountain | Trail | Carbon | 0.005371 | 0.023375 | 0.007472 | 0.000528 |

| 52 | Scalpel-Si Race | (2700.0, 12790.0] | Mountain | Cross Country Race | Carbon | 0.011713 | 0.009638 | 0.012867 | 0.000569 |

| 26 | F-Si Hi-Mod 1 | (2700.0, 12790.0] | Mountain | Cross Country Race | Carbon | 0.004748 | 0.013929 | 0.010030 | 0.000676 |

| 39 | Jekyll Carbon 2 | (2700.0, 12790.0] | Mountain | Over Mountain | Carbon | 0.006515 | 0.021079 | 0.010311 | 0.000744 |

| 35 | Habit Carbon 3 | (2700.0, 12790.0] | Mountain | Trail | Carbon | 0.007421 | 0.008960 | 0.011745 | 0.000785 |

| 49 | Scalpel-Si Carbon 3 | (2700.0, 12790.0] | Mountain | Cross Country Race | Carbon | 0.005525 | 0.034269 | 0.012994 | 0.000800 |

| 51 | Scalpel-Si Hi-Mod 1 | (2700.0, 12790.0] | Mountain | Cross Country Race | Carbon | 0.006579 | 0.018085 | 0.013578 | 0.000862 |

rad_final = rad_final.rename(columns={"Cluster 3":"High End Carbon Mountain Bike Customers"})

If you review your results and some of the clusters happened to be similar, it might be necessary to drop a few clusters and rerun the algorithm. In our case tha is not necessary, as generally good separations have been done. It is good to remember that the customer segmentation process can be performed with various clustering algorithms. In this post, we focused on k-means clustering

PCA is nothing more than an algorithm that takes numeric data in x, y, z coordinates and changes the coordinates to x’, y’, and z’ that maximize the linear variance.

How does this help in customer segmentation / community detection? Unlike k-means, PCA is not a direct solution. What PCA helps with is visualizing the essence of a data set. Because PCA selects PC’s based on the maximum linear variance, we can use the first few PC’s to describe a vast majority of the data set without needing to compare and contrast every single feature. By using PC1 and PC2, we can then visualize in 2D and inspect for clusters. We can also combine the results with the k-means groups to see what k-means detected as compared to the clusters in the PCA visualization.

If you want to scale the data, a scaler function is fine, if you want to scale and center the data then standardisation is the best. In practice we often ignore the shape of the distribution and just transform the data to center it by removing the mean value of each feature, then scale it by dividing non-constant features by their standard deviation. This is called standardisation.

from sklearn.pipeline import make_pipeline

import seaborn as sns

from sklearn.decomposition import PCA

from sklearn.preprocessing import StandardScaler

#pca2 = PCA(n_components=2)

pca2_results = make_pipeline(StandardScaler(),PCA(n_components=2)).fit_transform(rad.iloc[:,1:-(4+len(centroids))])

for i in range(pca2_results.shape[1]):

rad.iloc[:,1:-(4+len(centroids)):]["pca_"+str(i)] = pca2_results[:,i]

cmap = sns.cubehelix_palette(as_cmap=True)

f, ax = plt.subplots(figsize=(20,15))

points = ax.scatter(pca2_results[:,0], pca2_results[:,1],c=labels, s=50, cmap=cmap)

#c=df_2.TARGET,

f.colorbar(points)

plt.show()

### Each dot is a cycle shop

PCA can be a valuable cross-check to k-means for customer segmentation. While k-means got us close to the true customer segments, visually evaluating the groups using PCA helped identify a different customer segment, one that the [Math Processing Error] k-means solution did not pick up.

For customer segmentation, we can utilize network visualization to understand both the network communities and the strength of the relationships. Before we jump into network visualization

The first step to network visualization is to get the data organized into a cosine similarity matrix. A similarity matrix is a way of numerically representing the similarity between multiple variables similar to a correlation matrix. We’ll use Cosine Similarity to measure the relationship, which measures how similar the direction of a vector is to another vector. If that seems complicated, just think of a customer cosine similarity as a number that reflects how closely the direction of buying habits are related. Numbers will range from zero to one with numbers closer to one indicating very similar buying habits and numbers closer to zero indicating dissimilar buying habits.

from sklearn.metrics.pairwise import cosine_similarity

import numpy as np

cosine_similarity(rad.iloc[:,1:-(4+len(centroids))].T).shape

(30, 30)

cos_mat = pd.DataFrame(cosine_similarity(rad.iloc[:,1:-(4+len(centroids))].T), index=list(rad.iloc[:,1:-(4+len(centroids))].columns), columns=list(rad.iloc[:,1:-(4+len(centroids))].columns))

## Make diagonal zero

cos_mat.values[[np.arange(len(cos_mat))]*2] = 0

def front(self, n):

return self.iloc[:, :n]

pd.DataFrame.front = front

cos_mat.head(5).front(5)

| Albuquerque Cycles | Ann Arbor Speed | Austin Cruisers | Cincinnati Speed | Columbus Race Equipment | |

|---|---|---|---|---|---|

| Albuquerque Cycles | 0.000000 | 0.619604 | 0.594977 | 0.544172 | 0.582015 |

| Ann Arbor Speed | 0.619604 | 0.000000 | 0.743195 | 0.719272 | 0.659216 |

| Austin Cruisers | 0.594977 | 0.743195 | 0.000000 | 0.594016 | 0.566944 |

| Cincinnati Speed | 0.544172 | 0.719272 | 0.594016 | 0.000000 | 0.795889 |

| Columbus Race Equipment | 0.582015 | 0.659216 | 0.566944 | 0.795889 | 0.000000 |

It’s a good idea to prune the tree before we move to graphing. The network graphs can become quite messy if we do not limit the number of edges. We do this by reviewing the cosine similarity matrix and selecting an edgeLimit, a number below which the cosine similarities will be replaced with zero. This keeps the highest ranking relationships while reducing the noise. We select 0.70 as the limit, but typically this is a trial and error process. If the limit is too high, the network graph will not show enough detail.

edgeLimit = 0.7

cos_mat = cos_mat.applymap(lambda x: 0 if x <edgeLimit else x)

cos_mat.head(5).front(5)

| Albuquerque Cycles | Ann Arbor Speed | Austin Cruisers | Cincinnati Speed | Columbus Race Equipment | |

|---|---|---|---|---|---|

| Albuquerque Cycles | 0.0 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Ann Arbor Speed | 0.0 | 0.000000 | 0.743195 | 0.719272 | 0.000000 |

| Austin Cruisers | 0.0 | 0.743195 | 0.000000 | 0.000000 | 0.000000 |

| Cincinnati Speed | 0.0 | 0.719272 | 0.000000 | 0.000000 | 0.795889 |

| Columbus Race Equipment | 0.0 | 0.000000 | 0.000000 | 0.795889 | 0.000000 |

import igraph

from scipy.cluster.hierarchy import dendrogram, linkage

## I think the diagonal creates the one drpop

Z = linkage(cos_mat, 'ward')

/Users/dereksnow/anaconda/envs/py36/lib/python3.6/site-packages/ipykernel/__main__.py:2: ClusterWarning: scipy.cluster: The symmetric non-negative hollow observation matrix looks suspiciously like an uncondensed distance matrix

from ipykernel import kernelapp as app

cos_mat.drop_duplicates().shape

(30, 30)

from scipy.cluster.hierarchy import cophenet

from scipy.spatial.distance import pdist

c, coph_dists = cophenet(Z, pdist(cos_mat))

#list(cos_mat.as_matrix())

cos_mat

| Albuquerque Cycles | Ann Arbor Speed | Austin Cruisers | Cincinnati Speed | Columbus Race Equipment | Dallas Cycles | Denver Bike Shop | Detroit Cycles | Indianapolis Velocipedes | Ithaca Mountain Climbers | ... | Philadelphia Bike Shop | Phoenix Bi-peds | Pittsburgh Mountain Machines | Portland Bi-peds | Providence Bi-peds | San Antonio Bike Shop | San Francisco Cruisers | Seattle Race Equipment | Tampa 29ers | Wichita Speed | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Albuquerque Cycles | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.700233 | 0.000000 | 0.000000 | ... | 0.000000 | 0.730533 | 0.000000 | 0.707184 | 0.721538 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Ann Arbor Speed | 0.000000 | 0.000000 | 0.743195 | 0.719272 | 0.000000 | 0.000000 | 0.000000 | 0.738650 | 0.756429 | 0.000000 | ... | 0.000000 | 0.773410 | 0.000000 | 0.721959 | 0.782233 | 0.000000 | 0.000000 | 0.704031 | 0.000000 | 0.000000 |

| Austin Cruisers | 0.000000 | 0.743195 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.752929 | 0.000000 | ... | 0.717374 | 0.771772 | 0.000000 | 0.746299 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Cincinnati Speed | 0.000000 | 0.719272 | 0.000000 | 0.000000 | 0.795889 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.829649 | 0.000000 | 0.000000 | 0.807522 |

| Columbus Race Equipment | 0.000000 | 0.000000 | 0.000000 | 0.795889 | 0.000000 | 0.000000 | 0.000000 | 0.704296 | 0.000000 | 0.000000 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.778459 | 0.000000 | 0.000000 | 0.748018 |

| Dallas Cycles | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.743126 | 0.749603 | 0.000000 | 0.000000 | ... | 0.000000 | 0.768081 | 0.000000 | 0.754692 | 0.756797 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Denver Bike Shop | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.743126 | 0.000000 | 0.784315 | 0.000000 | 0.739835 | ... | 0.000000 | 0.873259 | 0.000000 | 0.855332 | 0.795989 | 0.728577 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Detroit Cycles | 0.700233 | 0.738650 | 0.000000 | 0.000000 | 0.704296 | 0.749603 | 0.784315 | 0.000000 | 0.000000 | 0.000000 | ... | 0.702683 | 0.833591 | 0.000000 | 0.836725 | 0.793167 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Indianapolis Velocipedes | 0.000000 | 0.756429 | 0.752929 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | ... | 0.000000 | 0.714849 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Ithaca Mountain Climbers | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.739835 | 0.000000 | 0.000000 | 0.000000 | ... | 0.000000 | 0.000000 | 0.814699 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.766426 | 0.000000 |

| Kansas City 29ers | 0.709368 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.746897 | 0.957257 | 0.788795 | 0.000000 | 0.738281 | ... | 0.704455 | 0.885133 | 0.000000 | 0.863437 | 0.826441 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Las Vegas Cycles | 0.000000 | 0.000000 | 0.000000 | 0.819100 | 0.793809 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.798941 | 0.000000 | 0.000000 | 0.857356 |

| Los Angeles Cycles | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.765069 | 0.701997 | 0.000000 | 0.000000 | ... | 0.000000 | 0.813920 | 0.000000 | 0.803344 | 0.729771 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Louisville Race Equipment | 0.000000 | 0.000000 | 0.000000 | 0.858299 | 0.780713 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.753219 | 0.000000 | 0.000000 | 0.819657 |

| Miami Race Equipment | 0.000000 | 0.887012 | 0.777433 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.766868 | 0.746176 | 0.000000 | ... | 0.715803 | 0.843452 | 0.000000 | 0.793937 | 0.788819 | 0.000000 | 0.000000 | 0.747068 | 0.000000 | 0.000000 |

| Minneapolis Bike Shop | 0.700426 | 0.744016 | 0.736987 | 0.000000 | 0.000000 | 0.780322 | 0.802102 | 0.780967 | 0.000000 | 0.000000 | ... | 0.708330 | 0.895092 | 0.000000 | 0.852946 | 0.833356 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Nashville Cruisers | 0.000000 | 0.812436 | 0.753784 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.737619 | 0.700912 | 0.000000 | ... | 0.000000 | 0.798585 | 0.000000 | 0.773644 | 0.733613 | 0.000000 | 0.000000 | 0.726407 | 0.000000 | 0.000000 |

| New Orleans Velocipedes | 0.000000 | 0.850807 | 0.809239 | 0.721739 | 0.000000 | 0.705624 | 0.000000 | 0.783405 | 0.780344 | 0.000000 | ... | 0.000000 | 0.836748 | 0.000000 | 0.808124 | 0.773396 | 0.000000 | 0.703193 | 0.811988 | 0.000000 | 0.000000 |

| New York Cycles | 0.000000 | 0.731991 | 0.708396 | 0.000000 | 0.000000 | 0.000000 | 0.787456 | 0.753576 | 0.000000 | 0.000000 | ... | 0.701388 | 0.849327 | 0.000000 | 0.816018 | 0.771974 | 0.000000 | 0.000000 | 0.722622 | 0.000000 | 0.000000 |

| Oklahoma City Race Equipment | 0.000000 | 0.875217 | 0.823806 | 0.714466 | 0.000000 | 0.000000 | 0.000000 | 0.782434 | 0.771407 | 0.000000 | ... | 0.705122 | 0.857586 | 0.000000 | 0.828342 | 0.798605 | 0.715004 | 0.000000 | 0.805118 | 0.000000 | 0.000000 |

| Philadelphia Bike Shop | 0.000000 | 0.000000 | 0.717374 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.702683 | 0.000000 | 0.000000 | ... | 0.000000 | 0.734866 | 0.000000 | 0.764986 | 0.000000 | 0.720375 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Phoenix Bi-peds | 0.730533 | 0.773410 | 0.771772 | 0.000000 | 0.000000 | 0.768081 | 0.873259 | 0.833591 | 0.714849 | 0.000000 | ... | 0.734866 | 0.000000 | 0.000000 | 0.913740 | 0.879047 | 0.740801 | 0.000000 | 0.722206 | 0.000000 | 0.000000 |

| Pittsburgh Mountain Machines | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.814699 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.716919 | 0.000000 |

| Portland Bi-peds | 0.707184 | 0.721959 | 0.746299 | 0.000000 | 0.000000 | 0.754692 | 0.855332 | 0.836725 | 0.000000 | 0.000000 | ... | 0.764986 | 0.913740 | 0.000000 | 0.000000 | 0.815651 | 0.760300 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Providence Bi-peds | 0.721538 | 0.782233 | 0.000000 | 0.000000 | 0.000000 | 0.756797 | 0.795989 | 0.793167 | 0.000000 | 0.000000 | ... | 0.000000 | 0.879047 | 0.000000 | 0.815651 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| San Antonio Bike Shop | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.728577 | 0.000000 | 0.000000 | 0.000000 | ... | 0.720375 | 0.740801 | 0.000000 | 0.760300 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| San Francisco Cruisers | 0.000000 | 0.000000 | 0.000000 | 0.829649 | 0.778459 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.779465 |

| Seattle Race Equipment | 0.000000 | 0.704031 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | ... | 0.000000 | 0.722206 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Tampa 29ers | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.766426 | ... | 0.000000 | 0.000000 | 0.716919 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| Wichita Speed | 0.000000 | 0.000000 | 0.000000 | 0.807522 | 0.748018 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.779465 | 0.000000 | 0.000000 | 0.000000 |

30 rows × 30 columns

Z.shape

(29, 4)

plt.figure(figsize=(25, 10))

plt.title('Hierarchical Clustering Dendrogram')

plt.xlabel('sample index')

plt.ylabel('distance')

dendrogram(

Z,

leaf_rotation=90., # rotates the x axis labels

leaf_font_size=8., # font size for the x axis labels

labels = list(cos_mat.columns),

)

plt.show()

## You can create a reasonable argument for 4 - 6 clusters.

cos_mat["Detroit Cycles"].sort_values(ascending=False)

Portland Bi-peds 0.836725

Phoenix Bi-peds 0.833591

Providence Bi-peds 0.793167

Kansas City 29ers 0.788795

Denver Bike Shop 0.784315

New Orleans Velocipedes 0.783405

Oklahoma City Race Equipment 0.782434

Minneapolis Bike Shop 0.780967

Miami Race Equipment 0.766868

New York Cycles 0.753576

Dallas Cycles 0.749603

Ann Arbor Speed 0.738650

Nashville Cruisers 0.737619

Columbus Race Equipment 0.704296

Philadelphia Bike Shop 0.702683

Los Angeles Cycles 0.701997

Albuquerque Cycles 0.700233

Louisville Race Equipment 0.000000

Las Vegas Cycles 0.000000

Tampa 29ers 0.000000

Ithaca Mountain Climbers 0.000000

Indianapolis Velocipedes 0.000000

Detroit Cycles 0.000000

Pittsburgh Mountain Machines 0.000000

San Antonio Bike Shop 0.000000

San Francisco Cruisers 0.000000

Cincinnati Speed 0.000000

Austin Cruisers 0.000000

Seattle Race Equipment 0.000000

Wichita Speed 0.000000

Name: Detroit Cycles, dtype: float64

- horizontal lines are cluster merges

- vertical lines tell you which clusters/labels were part of merge forming that new cluster

- heights of the horizontal lines tell you about the distance that needed to be “bridged” to form the new cluster

In case you’re wondering about where the colors come from, you might want to have a look at the color_threshold argument of dendrogram(), which as not specified automagically picked a distance cut-off value of 70 % of the final merge and then colored the first clusters below that in individual colors.

#### Supposedly, you can do some network analysis here. Of course, you are not sure how.

cos_igraph = igraph.Graph.Adjacency(list(cos_mat.as_matrix()),mode = 'undirected')

cos_bet = igraph.Graph.edge_betweenness(cos_igraph)

cos_bet

plt.title('Hierarchical Clustering Dendrogram')

plot_dendrogram(cos_igraph)

plt.show()

## Possibly use this for future network:

https://python-graph-gallery.com/327-network-from-correlation-matrix/